AI developer reveals when this technology could wipe out humanity

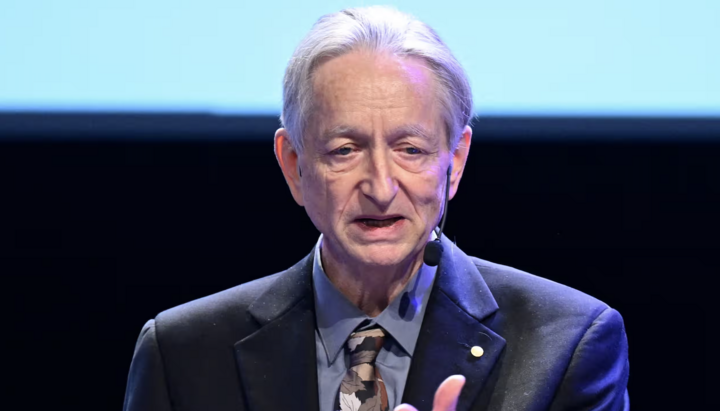

Geoffrey Hinton, the British-Canadian computer scientist who won this year's Nobel Prize in Physics, believes that artificial intelligence could destroy humanity within 30 years.

Geoffrey Hinton, often referred to as the "godfather" of artificial intelligence, stated that the likelihood of AI causing human extinction within three decades is between 10% and 20%, as "changes are happening rapidly."

Previously, Hinton estimated the probability of AI leading to a catastrophic outcome for humanity at 10%.

Asked on BBC Radio 4's Today program whether his estimate of a potential AI apocalypse – a one-in-ten chance – had changed, he replied, "Not quite, it's between 10% and 20%."

Reacting to Hinton's statement, Today guest editor Sajid Javid remarked, "You're going up," to which Hinton responded, "You see, we’ve never faced anything smarter than ourselves before."

He added: "How many examples can you think of where a less intelligent being controls a more intelligent one? There are very few. There’s the mother and child scenario. Evolution has worked hard to enable the child to control the mother, but that’s the only example I know."

According to Hinton, humans will be like toddlers next to powerful AI systems.

"I like to think of it this way: imagine yourself with a three-year-old child. We will be the three-year-old," he said.

Last year, Hinton made headlines by resigning from Google to speak more openly about the risks associated with unchecked AI development.

He believes "bad actors" will use this technology to harm others.

One major concern among AI safety advocates is that the creation of artificial general intelligence – or systems smarter than humans – could result in technology posing an existential threat by escaping human control.

Hinton noted that the pace of development is "very, very fast, much faster than I expected," and called for government regulation of the technology.

"I’m worried that the invisible hand won’t keep us safe. So, if you leave it all to the big companies, they won’t ensure safe development," he said.

"The only thing that can push these big companies to do more safety research is government regulation," the professor argued.

Earlier, it was reported that Elon Musk believes AI could surpass human intelligence by the end of next year.

Additionally, the Gemini neural network recently told a user, in polite terms, to "die," citing humanity’s harmfulness and uselessness.